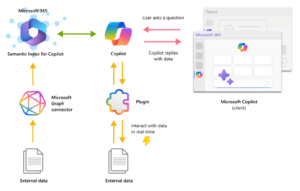

In data-driven decision-making, access to relevant, accurate, and timely data is paramount. Copilot Data Connectors link a variety of data sources to Copilot so that the data is available for your analysis.

This guide discusses Copilot Data Connectors. It explains how to use them, why they are important, and how they improve data workflows.

Copilot Data Connectors are innovative tools designed to simplify and streamline data integration processes.

They help users easily access data from different sources like cloud platforms, databases, and applications. Copilot Data Connectors make it easier to retrieve and integrate data. This allows users to concentrate on gaining valuable insights rather than managing separate data sources.

To get the latest data for analysis and reporting, you can turn on Real-Time Syncing for continuous updates. This keeps your insights current with the latest data from your sources.

You can connect the data to your favorite analytical tools, including business intelligence dashboards, machine learning models, and custom applications, for seamless integration and analysis.

Integrating external data with Copilot extends the scope of analysis, enabling organizations to gain a holistic view of their operations, customers, and competitive landscape.

Here's why connecting external data with Copilot is crucial:

External data sources provide valuable context that enriches internal datasets. Companies can improve their understanding of customers, competition, and opportunities by using external information.

This information can include market trends, consumer behavior, and industry standards. By analyzing this data, companies can gain insights into what customers desire and potential risks they may face.

Access to different types of outside information helps organizations base decisions on real-world conditions. For example, they can adjust marketing plans based on social media feedback.

They can also enhance supply chain operations with weather predictions. Ultimately, this leads to better business results.

External data often contains signals and patterns that can enhance predictive analytics and forecasting models. Companies can improve their predictive models by using outside information such as economic data, population trends, and global events.

This can make their models more accurate and reliable, helping companies plan and manage risks more effectively.

Leveraging external data can provide a competitive edge by uncovering hidden opportunities or early warning signs of potential risks.

With Copilot, organizations can spot market trends early and track competitors online, helping them stay ahead of the competition.

External data integration fosters innovation by enabling organizations to tap into new sources of insights and inspiration. Companies can identify new market segments by analyzing data from outside sources and generate new ideas for products and services.

External data sources play a critical role in risk management by providing early indicators of potential risks and vulnerabilities.

With Copilot, companies can use external data to anticipate and manage risks like supply chain problems and regulatory shifts. This helps prevent issues from getting worse.

Organizations can use Copilot to connect external data sources and access a wider range of data-driven insights for decision-making, planning, and innovation.

By doing this, organizations can enhance their analytical capabilities, discover new opportunities, lower risks, and stay ahead in today's fast-paced environment.

Copilot Data Connectors streamline the data integration process, reducing manual effort and time spent on data preparation tasks. This efficiency enables data teams to focus on higher-value activities such as analyzing, modeling, and strategic decision-making.

By bridging data silos and simplifying access to disparate data sources, Copilot Data Connectors democratize data access across organizations. Business users, analysts, and data scientists can get the data they need without needing specialized technical skills.

Connecting external data with Copilot enables you to grow with your changing data needs, providing scalability and flexibility. Whether you're dealing with small-scale datasets or massive data volumes, Copilot's robust infrastructure ensures seamless growth and performance.

Copilot Data Connectors provide real-time insights by giving organizations quick access to fresh data, so they can make decisions faster and respond promptly to market changes.

Copilot Data Connectors support a wide range of data platforms and formats, ensuring compatibility with your existing infrastructure and tools. This interoperability eliminates data silos and fosters a cohesive data ecosystem within your organization.

In conclusion, Copilot Data Connectors represent a paradigm shift in data integration, offering unparalleled efficiency, accessibility, and scalability.

Your organization can use connector tools to connect its data with Copilot and make full use of it, helping you innovate and grow strategically in today's data-focused environment.

In this in-depth guide to purchasing Copilot, you'll learn about every available licensing option and the type of user each option suits best, so you can choose the right plan for you.

“The company discovered there were different ‘types’ of Copilot users. One segment includes creators, researchers, and programmers who want rapid access to the latest generative AI tools.

Another segment includes Microsoft 365 customers who want to leverage Copilot in their apps for personal use. Plus, there are Copilot users who need access to the complete capabilities of Copilot, alongside enterprise data protection, Microsoft Graph grounding, and Copilot in Teams.

As a result, there are now different ‘Copilot plans’ available for each type of user, starting with the introduction of Microsoft Copilot Pro.” -- Android Authority

Freelancers and Hobbyists: Copilot’s free version is a good fit for freelancers and hobbyists who work on projects intermittently. It gives individuals access to Copilot’s capabilities without committing to a monthly subscription, making it cost-effective and accessible for users who don’t yet need Copilot’s complete suite of services.

Small to Medium-Sized Businesses (SMBs): SMBs with a Microsoft 365 Business Standard or Business Premium subscription and small to medium-sized development teams can benefit from Copilot Pro or Microsoft Copilot for Microsoft 365.

With its predictable monthly subscription fee per user, Copilot Pro offers unlimited access to Copilot's features, enabling teams to collaborate effectively and increase productivity without worrying about variable costs.

If the organization works heavily in Microsoft 365, then Copilot for Microsoft 365 is the best option to reap all of Copilot's benefits within its existing M365 platform.

Large Enterprises: Large enterprises with Microsoft 365 E3 or E5, complex development environments, and stringent security requirements can leverage Copilot for Microsoft 365.

Customizable pricing and features, along with advanced security controls and dedicated support, make Copilot for Microsoft 365 ideal for organizations seeking enterprise-grade solutions tailored to their specific needs.

The "free" Copilot experience for individuals grants access to foundational capabilities, web grounding, and commercial data protection. However, users do not have access to Copilot features within tools like Word, PowerPoint, or Teams.

Free users can utilize Copilot in specific solutions, such as Bing Chat and Bing Chat Enterprise.

Considering upgrading from the free version of Copilot to the monthly Copilot Pro subscription for $20? If you're a casual Copilot user and satisfied with the performance and features of the free version, there's no pressing need to upgrade.

However, if you frequently use Microsoft Office applications, the decision becomes more nuanced. Copilot Pro unlocks AI features within Office, particularly in apps like PowerPoint, Excel, and Outlook, which can be truly transformative.

The ability to command Copilot to assist in creating a PowerPoint presentation based on a Word document or email is remarkable and can significantly save time.

If such capabilities align with your needs and preferences, then opting for a Copilot Pro subscription is advisable. While $20 per month may seem steep, we anticipate that Copilot Pro will continue to evolve with additional features over time, potentially increasing its value in the future.

The Copilot Free Plan is particularly beneficial for individuals and small teams who have occasional or moderate needs for AI-driven assistance in software development but do not require access to advanced features or integration with Microsoft Office applications.

Specifically, the following demographics would benefit the most from Copilot's Free Plan:

Freelancers and Hobbyists: Individuals who work on coding projects independently or as a hobby can benefit from the Copilot Free Plan as it provides foundational AI capabilities without the need for a paid subscription.

Students: Students learning to code or working on coding projects for academic purposes can leverage the Copilot Free Plan to access AI-powered coding assistance at no cost.

Small Development Teams: Small teams with limited resources or budgets can utilize the Copilot Free Plan to enhance their coding productivity without incurring additional expenses.

Startups and Bootstrapped Companies: Startups and bootstrapped companies in their early stages of development may find the Copilot Free Plan advantageous as it offers basic AI assistance without requiring a financial commitment.

Overall, the Copilot Free Plan caters to individuals and organizations looking to explore AI-driven coding assistance without the need for advanced features or integration with Microsoft Office applications.

The Copilot Pro Plan is best suited for users and organizations with more extensive needs, particularly those who rely heavily on Microsoft Office applications for their work.

The following demographics would benefit the most from Copilot's Pro Plan:

Professional Developers: Experienced developers and software engineers who work on complex projects and require advanced AI-driven assistance, such as code suggestions, refactoring, and bug fixing, would benefit greatly from the Copilot Pro Plan.

Microsoft Office Users: Individuals and teams who heavily utilize Microsoft Office applications like Word, PowerPoint, Excel, and Outlook in their workflow can unlock the full potential of Copilot's integration with Office applications with the Pro Plan. This includes features such as generating PowerPoint presentations based on Word documents or emails, which can significantly save time and enhance productivity.

Companies with Compliance and Security Needs: Enterprises with strict compliance and security requirements can benefit from the advanced security controls and dedicated support offered by the Copilot Pro Plan, ensuring that their coding activities remain secure and compliant with industry standards.

Teams Requiring Priority Support and Collaboration Tools: Organizations that require priority support and advanced collaboration tools, such as centralized billing and management, can take advantage of the Copilot Pro Plan to enhance team collaboration and productivity.

For users who want to incorporate Copilot into their Microsoft 365 infrastructure, there are several options to explore, which we break down below:

For individuals or families subscribed to Microsoft 365 Personal or Family, Copilot Essentials provides access to essential AI-powered features within select Microsoft 365 apps.

With Copilot Essentials, users can enjoy priority access to Copilot and experience faster performance. Additionally, Copilot Essentials accelerates image creation with Designer, making it an ideal choice for personal or household use.

Individuals or families seeking enhanced productivity within select Microsoft 365 apps.

Casual users who do not require extensive AI capabilities but value improved performance and image creation features.

Designed for customers with a Microsoft 365 Business Standard or Business Premium subscription, Copilot for Microsoft 365 Business Essentials offers a comprehensive set of AI-powered tools tailored for small to medium-sized businesses.

Users gain access to AI-powered chat with secure access to organizational graphs, enabling efficient communication and collaboration. Additionally, Copilot is seamlessly integrated into Microsoft 365 apps such as Word, Excel, PowerPoint, Outlook, and Teams, enhancing productivity across the organization.

With the ability to customize and extend Copilot using Microsoft Copilot Studio Preview, businesses can adapt the AI assistant to meet their unique needs. Furthermore, Copilot for Microsoft 365 Business Essentials provides enterprise-grade security, privacy, and compliance features, ensuring data protection and regulatory compliance.

Small to medium-sized businesses requiring AI-powered tools for improved communication, collaboration, and productivity.

Organizations seeking enterprise-grade security and compliance features within their AI assistant.

For customers with a Microsoft 365 E3 or E5 subscription, Copilot for Microsoft 365 Enterprise Solutions offers advanced AI capabilities tailored for large enterprises.

In addition to AI-powered chat and access to Copilot in Microsoft 365 apps, enterprise users benefit from customizable AI solutions through Microsoft Copilot Studio Preview.

With enterprise-grade security, privacy, and compliance features, Copilot for Microsoft 365 Enterprise Solutions ensures data protection and regulatory compliance at scale.

Large enterprises with complex workflows and extensive AI requirements.

Organizations seeking highly customizable AI solutions with robust security and compliance features.

On March 1, 2024, Microsoft released the following additional licensing options for Copilot. These licenses, Copilot for Sales and Copilot for Service, will be available through CSP.

Read the graph below to learn more or contact our procurement team at quotes@managedsolution.com.

Choosing the right Copilot pricing plan depends on your specific needs and the Microsoft 365 subscription you currently hold. Whether you're an individual user, a small business, or a large enterprise, there's a Copilot plan tailored to enhance your productivity, collaboration, and security within the Microsoft 365 ecosystem.

We hope this guide to purchasing Copilot helps you evaluate your requirements carefully and select the Copilot plan that best aligns with your goals and priorities.

If you're ready to unlock the full potential of AI-driven productivity tools within Microsoft 365, reach out to our procurement team at quotes@managedsolution.com to learn more, and explore the Copilot pricing plans today with one of our experts.

How ConnectWise Sidekick combines AI and Managed Services to enhance businesses everywhere.

Love is in the air, and at Managed Solution, there’s one thing we’re head over heels for: ConnectWise Sidekick™. As we celebrate the season of love, let us break down the incredible features of this powerful new tool and its many benefits.

Hyper-automation and artificial intelligence come together seamlessly in ConnectWise Sidekick. This powerful tool automates the triage, summarization, and resolution suggestion processes, leading to quicker service ticket resolutions and consistently exceptional customer experiences.

By optimizing ticket management, ConnectWise Sidekick empowers technicians to operate with efficiency and effectiveness. Moreover, it centralizes information access within Microsoft Teams, facilitating streamlined updates across all teams.

ConnectWise Sidekick isn’t just about functionality; it’s also about financial prudence. The cost savings per technician help us manage our budget effectively, ensuring that our resources are utilized optimally.

Specifically, businesses save $1,042 per month by implementing ConnectWise Sidekick. Thanks to the power of AI, organizations can achieve greater productivity at faster rates and reduce the need for internal labor, optimizing for both growth and overall spend.

ConnectWise Sidekick isn’t just beneficial for MSPs like Managed Solution; it also extends its advantages to the broader TSP community. Its impact goes beyond our organization, fostering a network of collaboration and support among peers and clients.

ConnectWise Sidekick simplifies workflows with its automation capabilities. It handles routine processes seamlessly, allowing users to focus on tasks that require expertise.

ConnectWise Sidekick helps minimize unnecessary expenses by optimizing resource utilization. Its cost-saving features help maintain operational efficiency without compromising quality.

ConnectWise Sidekick delivers swift, accurate solutions for businesses. Its AI-driven insights ensure that teams receive the support they need promptly and effectively.

ConnectWise Sidekick seamlessly integrates with ConnectWise PSA, enhancing the functionality of our platform. Its advanced features enrich our PSA experience, making it more robust and reliable.

ConnectWise Sidekick streamlines our operations by automating manual tasks. Its efficient workflow management allows us to optimize our time and resources effectively.

ConnectWise Sidekick provides valuable insights derived from our PSA data. These insights empower us to make informed decisions that contribute to our clients' satisfaction and our business growth.

In conclusion, ConnectWise Sidekick isn’t just a tool; it’s a strategic asset that enhances operations by combining the power of AI and Managed Services. Its efficiency-driven approach, cost-saving features, and collaborative benefits make it an invaluable addition to our toolkit.

Ready to fall in love with the full potential of ConnectWise Sidekick? Contact us today and let’s explore the possibilities together.

In today's era of digital transformation, businesses recognize the strategic importance of implementing artificial intelligence (AI) to maintain a competitive edge.

Managed Solution, a trailblazer in innovative technology solutions, introduces a robust six-stage methodology designed to seamlessly integrate AI into customer environments. The six stages, Collect, Cleanse, Condition, Comply, Compute, and Connect, are each important for integrating AI successfully.

The 'Collect' stage focuses on identifying and consolidating data scattered across various sources. This initial step ensures a comprehensive understanding of the data landscape, laying the groundwork for a successful AI implementation.

The 'Cleanse' stage focuses on data quality: removing errors and duplicates so that the data is accurate and reliable. A robust cleansing process is crucial for obtaining meaningful and actionable AI-driven insights.
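As a rough illustration of what a cleansing pass can involve, here is a minimal Python sketch. It assumes a hypothetical `CustomerRecord` schema with `customer_id`, `email`, and `country` fields, treats a missing ID or an email without "@" as an error, and keeps the first record seen for each ID. It is not Managed Solution's actual tooling; a real pipeline would apply validation rules specific to your own data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    # Hypothetical schema, used only for illustration.
    customer_id: str
    email: str
    country: str


def cleanse(records: list[CustomerRecord]) -> list[CustomerRecord]:
    """Normalize fields, drop invalid rows, and de-duplicate on customer_id."""
    seen: set[str] = set()
    cleaned: list[CustomerRecord] = []
    for rec in records:
        # Normalize whitespace and casing so near-duplicates compare equal.
        cid = rec.customer_id.strip()
        email = rec.email.strip().lower()
        country = rec.country.strip().upper()
        # Error rule (assumption): a row needs an ID and an email containing "@".
        if not cid or "@" not in email:
            continue
        # Duplicate rule: keep only the first row seen for each customer_id.
        if cid in seen:
            continue
        seen.add(cid)
        cleaned.append(CustomerRecord(cid, email, country))
    return cleaned


if __name__ == "__main__":
    raw = [
        CustomerRecord("C1", " Ana@Example.com ", "us"),
        CustomerRecord("C1", "ana@example.com", "US"),  # duplicate ID
        CustomerRecord("", "x@example.net", "GB"),      # missing ID
        CustomerRecord("C2", "not-an-email", "DE"),     # malformed email
        CustomerRecord("C3", "bo@example.org", "fr"),
    ]
    for rec in cleanse(raw):
        print(rec)
```

Normalizing before de-duplicating matters here: without it, " Ana@Example.com " and "ana@example.com" would look like different values even though they describe the same customer.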

In the 'Condition' stage, Managed Solution ensures the implementation and updating of information protection policies. This involves measures to safeguard data integrity, confidentiality, and availability, establishing a secure data environment crucial for successful AI implementation.

The 'Comply' stage goes beyond protection to compliance. We label and classify data to follow rules and regulations. This step is particularly vital for industries with strict compliance requirements, such as healthcare and finance.

The 'Compute' stage involves leveraging Microsoft Graph and Microsoft 365 Applications to seamlessly integrate data sources with AI systems. This integration is pivotal for creating a unified data environment that facilitates efficient AI-driven insights.

The final 'Connect' stage marks the culmination of Managed Solution's methodology. Here, AI capabilities are incorporated into the customer's environment, improving responses and predictions.

By leveraging the power of AI, organizations can unlock valuable insights, automate processes, and make informed decisions. Managed Solution's six-stage methodology offers a comprehensive framework for organizations looking to seamlessly implement AI in their environments.

Every step is crucial for a smooth transition to an AI-driven future. This includes gathering data, following rules, and implementing AI. Businesses can fully utilize AI, innovate, and stay ahead in technology by following this methodology.

Our vision for Microsoft Copilot is to bring the power of generative AI to everyone across work and life. Customers like Visa, BP, Honda, and Pfizer, and partners like Accenture, KPMG, and PwC are already using Copilot to transform the way they work, and 40% of the Fortune 100 participated in the Copilot Early Access Program.

We are updating our Microsoft Copilot product line-up with a new Copilot Pro subscription for individuals; expanding Copilot for Microsoft 365 availability to small and medium-sized businesses; and announcing no seat minimum for commercial plans—making Copilot generally available to individuals, enterprises, and everyone in between.

Read on for all the details.

Copilot for Microsoft 365 enables users to enhance their creativity, productivity, and skills.

First, we are announcing an update to our Copilot product line-up: Copilot Pro—a new Copilot subscription for individuals priced at $20 per individual per month.

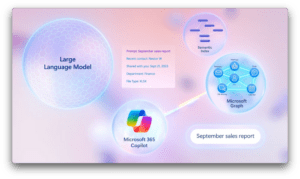

Copilot Pro has foundational capabilities in a single experience that runs across your devices and understands your context on the web, on your PC, across your apps, and soon on your phone to bring the right skills to you when you need them. And it has web grounding, so it always has access to the latest information. When you’re signed into Copilot with your Microsoft Entra ID, you get commercial data protection for free—which means chat data isn’t saved, Microsoft has no eyes-on access, and your data isn’t used to train the models.

Copilot Pro provides priority access to the very latest models—starting with OpenAI’s GPT-4 Turbo. You’ll have access to GPT-4 Turbo during peak times for faster performance and, coming soon, the ability to toggle between models to optimize your experience how you choose. Microsoft 365 Personal and Family subscribers can use Copilot in Word, Excel (currently in Preview and English only), PowerPoint, Outlook, OneNote on PC, and soon on Mac and iPad. It includes enhanced AI image creation with Designer (formerly Bing Image Creator) for faster, more detailed image quality as well as landscape image format. And Copilot Pro gives you the ability to build your own Copilot GPT—a pre-customized Copilot tailored for a specific topic—in our new Copilot GPT Builder (coming soon) with just a simple set of prompts.


While Copilot Pro is our best experience for individuals, Copilot for Microsoft 365 is our best experience for organizations. Copilot for Microsoft 365 gives you the same priority access to the very latest models. You get Copilot in Word, Excel, PowerPoint, Outlook, OneNote, and Microsoft Teams—combined with your universe of data in the Microsoft Graph. It has enterprise-grade data protection which means it inherits your existing Microsoft 365 security, privacy, identity, and compliance policies. And it also includes Copilot Studio, so organizations can customize Copilot for Microsoft 365 and build their own custom copilots and plugins as well as manage and secure their customizations and standalone copilots with the right access, data, user controls, and analytics.

Here’s a summary of the updated Copilot product line-up:

THE COPILOT SYSTEM

To empower every organization to become AI-powered, we are making three changes. First, we are removing the 300-seat purchase minimum for Copilot for Microsoft 365 commercial plans. Second, we are removing the Microsoft 365 prerequisite for Copilot, so now Office 365 E3 and E5 customers are eligible to purchase. We’re also extending Semantic Index for Copilot to Office 365 users with a paid Copilot license. Semantic Index works with the Copilot System and the Microsoft Graph to create a sophisticated map of all the data and content in your organization, enabling Microsoft 365 Copilot to deliver personalized, relevant, and actionable responses. Third, we are excited to announce that Copilot for Microsoft 365 is now generally available for small and medium-sized businesses, from solopreneurs running and launching their first business to 300-person fast-growing startups. If you are using either Microsoft 365 Business Standard or Microsoft 365 Business Premium, you can now purchase Copilot for Microsoft 365 for $30 per user per month.¹
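Because pricing is per seat with no minimum, budgeting is simple arithmetic. The minimal sketch below assumes the list prices quoted in this announcement ($30 per user per month for Copilot for Microsoft 365 and $20 per month for Copilot Pro) and ignores taxes, discounts, billing terms, and the cost of the prerequisite Microsoft 365 or Office 365 license. The helper name `copilot_add_on_cost` is hypothetical.

```python
# List prices (USD) quoted in the announcement; actual pricing may vary by market and agreement.
COPILOT_M365_PER_USER_MONTH = 30
COPILOT_PRO_PER_USER_MONTH = 20


def copilot_add_on_cost(seats: int, price_per_user_month: int, months: int = 12) -> int:
    """Return the list-price cost of Copilot seats over `months`.

    Hypothetical helper for budgeting only: excludes taxes, discounts,
    and the prerequisite Microsoft 365 / Office 365 base license.
    """
    if seats < 1 or months < 1:
        raise ValueError("seats and months must be positive integers")
    return seats * price_per_user_month * months


if __name__ == "__main__":
    # A 25-person business licensing every employee for one year.
    print(copilot_add_on_cost(25, COPILOT_M365_PER_USER_MONTH))  # 9000
    # One individual on Copilot Pro for a single month.
    print(copilot_add_on_cost(1, COPILOT_PRO_PER_USER_MONTH, months=1))  # 20
```

With no seat minimum, the `seats` value can match the number of people who actually need Copilot rather than a purchase threshold.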

Small and medium-sized businesses are the heart of every community and the lifeblood of local economies. They have an outsized impact on the world and markets they support: in the United States, this category accounts for 99.9% of businesses and employs nearly half of the workforce.²

These businesses stand to gain the most from this era of generative AI, and Copilot is uniquely suited to meet their needs. Small and medium-sized business owners report that communicating with customers takes up most of their time (66%), with managing budgets (50%) and administrative tasks (48%) not far behind.³ Copilot for Microsoft 365 can help reduce this daily grind, giving business owners valuable time back to focus on what matters most: growing their business. And with the Microsoft Copilot Copyright Commitment, small business owners can trust that they are working with a reliable partner. Small and medium-sized business customers that have Microsoft 365 Business Standard or Business Premium can learn how to purchase Copilot for Microsoft 365 via our website or by contacting a partner.

We have been learning alongside small and medium-sized business customers in our Copilot Early Access Program. Here’s what they’re saying about how Copilot for Microsoft 365 is already transforming their work.

“I love that it’s in our environment. It’s able to cross-pollinate and gather information from all of the data we’ve got in Microsoft 365. As a business owner, that’s really important to me because it keeps our people working inside our systems.”

—James Hawley, CEO and Founder at NextPath Career Partners

“I do believe that there isn’t a single job position in the company that won’t benefit in some way from Copilot being available to them.”

—Alex Wood, Senior Cloud Engineer at Floww

“Copilot accurately summarizes the call and meeting notes in minutes. That’s not just faster, it means callers can add more value to the discussion rather than just take notes.”

—Philip Burridge, Director, Operations and Strategy at Morula Health

Commercial customers, including small and medium-sized businesses, can now purchase Copilot for Microsoft 365 through our network of Cloud Solution Provider partners (CSPs). This means Copilot for Microsoft 365 is now available across all our sales channels. CSPs have served as trusted advisors to their customers, unlocking profitability for businesses and expanding their own capabilities. Learn more about Cloud Solution Provider partners.

These announcements come just one month after we announced that we are making Copilot for Microsoft 365 generally available to education customers with Microsoft 365 A3 or A5 faculty, and we’re expanding that to include Office 365 A3 or A5 faculty with no seat minimum. While education licenses are not yet included in the CSP expansion announced today, we will share updates in the coming months. It’s all part of our vision to empower everyone—from individuals to global enterprises—for an AI-powered world.

Learn more about Microsoft Copilot and visit the Copilot for Work site for actionable guidance on how you can start transforming work with Copilot today.

Learn more about Copilot for Microsoft 365 for small and medium-sized businesses, including next steps and licensing and technical requirements, and get familiar with Copilot capabilities.

Visit WorkLab for critical research and insights on how generative AI is transforming work.

Copilot helps you achieve things like never before using the power of AI.

¹ Copilot for Microsoft 365 may not be available for all markets and languages. To purchase, enterprise customers must have a license for Microsoft 365 E3 or E5 or Office 365 E3 or E5, and business customers must have a license for Microsoft 365 Business Standard or Business Premium.

Copilot is currently supported in the following languages: English (US, GB, AU, CA, IN), Spanish (ES, MX), Japanese, French (FR, CA), German, Portuguese (BR), Italian, and Chinese Simplified. We plan to support the following languages (in alphabetical order) over the first half of 2024: Arabic, Chinese Traditional, Czech, Danish, Dutch, Finnish, Hebrew, Hungarian, Korean, Norwegian, Polish, Portuguese (PT), Russian, Swedish, Thai, Turkish, and Ukrainian.

² U.S. Small Business Administration. (2023). Frequently Asked Questions.

³ Wakefield Research. (2023). Microsoft study: Small businesses intrigued by AI and the opportunities it brings.

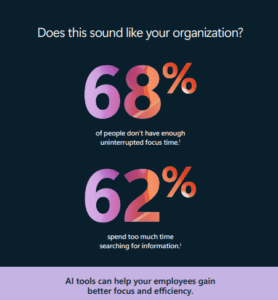

How is your business preparing for AI?

The way we now work—defined by an era of digital transformation—is evolving at such a pace that keeping up has become a challenge for many organizations. Business leaders are recognizing the enormous opportunity that modern work solutions like AI tools present and are now exploring ways to use them to their best advantage.

That means implementing AI in a way that allows employees to be more engaged and productive, focusing on the work that matters most.

AI helps your employees offload time-consuming tasks by jumpstarting the creative process, analyzing trends, and summarizing email threads and key discussion points from meetings. Less time spent on more tedious tasks grants employees the freedom to focus on the work that matters most.

Organizations that made employee engagement a top priority have performed twice as well financially as businesses that deprioritized an engaged workforce (2). And AI is poised to empower employees to be more engaged through opportunities for creativity, skill building, and increased productivity.

Imagine a workforce driven by the powerful combination of irreplaceable human ingenuity and skill with the innovative capabilities of a secure, user-friendly AI system. With the right tools and mindset, any organization can embrace the transformation to an AI-powered workforce.

With all the potential that working with AI holds, it’s critical to approach AI preparation in a way that prioritizes fairness, transparency, and accountability. Microsoft is a leader in the development and implementation of AI systems, which includes creating guidance on principles and best practices for responsible AI use and ensuring those principles are at the foundation of Microsoft’s AI technology. Only with responsible AI implementation can your organization unlock the full potential of what’s possible with AI.

How do you know your organization is prepared for AI?

The platform shift to AI isn’t one that can be made without clear leadership and focused investment. Implementing AI technology can be complex, especially without a well-defined plan in place. It requires a culture of productivity and collaboration, a secure foundation for endpoint management, and the willingness to embrace change in order to outpace it.

What does AI readiness look like? An organization ready to embrace AI understands AI's potential as a strategic asset and how it can benefit the business. It is also able to guide employees and customers in responsible AI practices.

Assessing your readiness level is the first step toward transforming your organization into one that can outpace the changes and challenges of this new era of working with next-generation technologies like AI.

To help you begin the transformation to an AI-powered organization, we’ve compiled a quiz to help you evaluate your organization's readiness level.

The quiz has five parts, each assessing a different aspect of your organization's readiness.

Choose the answer that sounds most like where your organization currently stands and keep a tally of your answers as you go. At the end of the quiz, you’ll be able to estimate whether your organization is Very Ready, Almost Ready, or Needs More Guidance in preparing for AI to begin the transformation to an AI-powered workforce.

Rate your organization’s openness to change and innovation.

How confident are you that your current technology empowers your employees to be productive?

How fundamental is AI to the success of your business?

Rate your employees’ current willingness to adopt new technologies, including AI.

How important is it to provide secure, user-friendly AI systems to your workforce?

How much, if at all, has your organization’s leadership bought into the business value of AI-powered tools?

How strong is your investment in change management and training to support the transition to AI-powered processes and systems?

Does your organization have funding for deploying and implementing a secure, user-friendly AI system?

As you prepare for AI, how confident are you that your organization’s current endpoint management capabilities enable employees to do their best work, regardless of where they work or what device they use?

Rate your organization’s current security foundation for enabling AI-powered work from any endpoint.

Your organization has put in the work to prepare for AI, and true transformation is underway. The Microsoft 365 team is excited to help you move forward. A couple of key notes for Very Ready organizations:

Having that baseline productivity in place will enable your employees to start exploring the benefits of Copilot as soon as possible. You can find a link to get started at the end of this blog.

Your organization is nearly there. Like Very Ready organizations, your Almost Ready business should consider deploying Microsoft 365, if you haven’t already. Help your employees learn their way around Microsoft 365 now so that it's easier for them to adopt the AI integrations down the road.

A couple of notes for Almost Ready organizations:

It sounds like your organization could benefit from personalized guidance on implementing AI tools. Microsoft can help you learn more about the requirements and benefits of a secure, user-friendly AI system!

A couple of notes to get organizations that Need More Guidance started:

An organization that is prepared for AI and ready to unlock all that's possible has three things: a strong foundation in security, streamlined endpoint management, and an openness to innovation that improves productivity. With Microsoft 365, your organization can achieve those checkpoints with one complete solution.

With Microsoft 365, your organization's technology landscape will be grounded in Zero Trust, ensuring a secure foundation for all of your endpoints, no matter where access is coming from. A Zero Trust security foundation in turn makes endpoint management safer and less complex, so you can efficiently grant employees access to all the tools they need to be more productive than ever.

Microsoft brings decades of forward-looking technology expertise, and that experience is built into the features that make Microsoft 365 so powerful. Now, with Copilot, your organization can extend the capabilities of Microsoft 365 even further.

Microsoft 365 delivers a secure, productive working experience. With Copilot, it becomes an intuitive, next-generation productivity partner that works alongside your employees. It helps them collaborate and work more efficiently so they can contribute more to business outcomes.

Copilot can help your team tap into the rich functionality available throughout Microsoft 365. It makes employees even better at what they’re already good at and helps them master what they’ve yet to learn. It integrates seamlessly with the apps your teams already use and is designed to unlock their untapped potential.

Ultimately, Copilot elevates the powerful capabilities of Microsoft 365 to enhance your workforce’s productivity, creativity, and skills—benefits your organization can experience when you make the transformation to AI.

Sources:
1. “Will AI Fix Work?” Work Trend Index, May 9, 2023. Microsoft. https://www.microsoft.com/en-us/worklab/work-trend-index/will-ai-fix-work
2. “The New Performance Equation in the Age of AI.” Work Trend Index, April 20, 2023. Microsoft. https://www.microsoft.com/en-us/worklab/work-trend-index/the-new-performance-equation-in-the-age-of-ai
3. “A Whole New Way of Working.” Work Trend Index. Microsoft. https://www.microsoft.com/en-us/worklab/ai-a-whole-new-way-of-working

©2023 Microsoft Corporation. All rights reserved. This document is provided “as-is.” Information and views expressed in this document, including URL and other Internet website references, may change without notice.

Today's many technological revolutions are changing the business environment almost beyond recognition. In the financial sector, artificial intelligence (AI) is finally addressing some long-standing compliance issues.

Of the $35.8 billion in projected AI spending across all industries in 2019, banks and other financial institutions are investing $5.6 billion. This money will go toward areas such as prevention systems, fraud analysis, investigations, and automated threat intelligence. Along with retail, manufacturing, and healthcare providers, the banking sector is one of the top spenders on AI.

This investment isn't without merit, either. The McKinsey Global Institute estimates that the financial sector could generate more than $250 billion in value from AI over the coming years. That value would come from improved decision-making, better risk management, and personalized services. Despite these projections, many financial firms are cautious about implementing AI. But those that want a competitive advantage need to overcome this instinct and benefit from what artificial intelligence has to offer.

For lead handling and distribution, most banks use a "round robin" system. Each loan officer is assigned an equal number of leads in circular order, without any priority. NBKC Bank, a midsized financial institution based in Kansas, instead introduced AI into the process.

The bank realized that some loan officers performed better in the morning and others in the evening. It therefore implemented a platform that distributes leads based on each officer's peak efficiency times. A quarter of leads are still assigned randomly, and the rest are assigned by this intelligent system. The system also accounts for individual workloads so that every officer receives an equal number of leads. With this approach, NBKC Bank improved its loan officers' performance by 65% and its closing rates by 10 to 15%.
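To make the difference from round robin concrete, here is a minimal, hypothetical sketch in Python. It is not NBKC's actual platform. The `Officer` class, its `peak_hours` field, and the `random_share` parameter are assumptions introduced for illustration.

- Round robin hands out leads in circular order.
- The peak-time variant sends roughly a quarter of leads to a random officer and the rest to an officer whose peak hours include the lead's arrival hour.
- Both paths choose only among the least-loaded officers, so lead counts stay equal.

```python
import random
from dataclasses import dataclass, field
from itertools import cycle
from typing import List, Optional, Set, Tuple


@dataclass
class Officer:
    name: str
    # Hypothetical: hours of the day (0-23) when this officer tends to perform best.
    peak_hours: Set[int]
    leads: List[str] = field(default_factory=list)


def round_robin(lead_ids: List[str], officers: List[Officer]) -> None:
    """Baseline: assign leads in circular order with no priority."""
    for lead_id, officer in zip(lead_ids, cycle(officers)):
        officer.leads.append(lead_id)


def peak_time_assign(
    leads: List[Tuple[str, int]],
    officers: List[Officer],
    random_share: float = 0.25,
    rng: Optional[random.Random] = None,
) -> None:
    """Hypothetical peak-time routing with balanced workloads.

    Each lead is (lead_id, hour_received). About `random_share` of leads go to
    a random officer; the rest go to an officer whose peak hours include the
    lead's hour. Either way, only the least-loaded officers are eligible.
    """
    if rng is None:
        rng = random.Random()
    for lead_id, hour in leads:
        min_load = min(len(o.leads) for o in officers)
        # Restricting to the least-loaded officers keeps counts within one of each other.
        eligible = [o for o in officers if len(o.leads) == min_load]
        if rng.random() < random_share:
            chosen = rng.choice(eligible)
        else:
            # Prefer an officer at peak efficiency; fall back to any eligible officer.
            at_peak = [o for o in eligible if hour in o.peak_hours]
            chosen = rng.choice(at_peak or eligible)
        chosen.leads.append(lead_id)
```

Restricting each choice to the least-loaded officers is one simple way to honor the equal-workload constraint. The trade-off is that a lead will occasionally go to an officer outside their peak window when the peak-time officer already has more leads.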

Financial institutions have used various statistical models to evaluate risk for some time. The most significant difference today is that such algorithms are used far more extensively than in the past. The volume and variety of available data are also much greater than in previous years. Combined with the introduction of AI and machine learning (ML), these developments can help solve many problems.

Fraud analysis is one such example. With AI, banks and other financial institutions can spot fraud faster by detecting unusual activity in real time. Similarly, AI can detect and filter out fraudulent or otherwise high-risk applications. Agents then only have to review the applications that make it past the system, which significantly increases their overall effectiveness.
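Production fraud systems are far more sophisticated. Still, the core idea of flagging unusual activity as it arrives can be shown with a simple statistical stand-in. This sketch is not AI and not any bank's actual method. It swaps in a plain z-score test over a running mean and standard deviation, maintained with Welford's online algorithm. The `screen` function and the `threshold` and `min_history` values are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: running mean and variance in one pass."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; treated as 0.0 until there are two observations.
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0


def is_unusual(stats: RunningStats, amount: float,
               threshold: float = 3.0, min_history: int = 5) -> bool:
    """Flag an amount whose z-score against the history exceeds `threshold`."""
    if stats.n < min_history or stats.std == 0.0:
        return False  # not enough history (or no spread) to judge yet
    return abs(amount - stats.mean) / stats.std > threshold


def screen(amounts, threshold: float = 3.0):
    """Process transactions in arrival order; return indices flagged for review."""
    stats = RunningStats()
    flagged = []
    for i, amount in enumerate(amounts):
        if is_unusual(stats, amount, threshold):
            flagged.append(i)
        else:
            stats.update(amount)  # learn only from transactions that look normal
    return flagged
```

For example, `screen([50, 52, 48, 51, 49, 50, 5000, 51])` flags only index 6. Skipping the update for flagged amounts keeps a single outlier from inflating the baseline. The flagged items would then go to a human reviewer, much like the agent review step described in the paragraph above.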

AI can also draw on alternative data sources, allowing banks to offer lending products to new groups of people. In the future, AI is expected to take on even more complex tasks, such as deal organization or financial contract review.

Sumitomo Mitsui Banking Corp (SMBC), a global financial organization, is one institution deploying AI for customer service. It uses IBM Watson, a question-answering computer system that can monitor all call center conversations, automatically recognize questions, and provide operators with real-time answers.

After Watson was introduced, the cost of each call fell by 60 cents, which equates to over $100,000 in annual savings for the bank. The system also increased customer satisfaction by 8.4%.

SMBC also uses IBM Watson for employee-facing interactions, answering questions that staff members may have about internal operations. The AI system is also used to deal with a variety of cybersecurity issues.

Investing in AI should be on every financial institution's priority list going forward. Nevertheless, navigating implementation and compliance issues can be challenging. With Managed Solution, you can find the application that best suits your needs. Contact us today for more information.

To download the full magazine and read the full interviews, click here.

Dr. Claire Weston is an accomplished and dedicated scientific leader with a track record of success in cancer research. She earned a PhD from Cambridge University in the UK. She has since led teams and projects focused on cancer biomarkers in both large pharma and start-up environments. Claire founded Reveal Biosciences in 2012 and has since led it to strong year-on-year growth. She has authored numerous peer-reviewed publications in leading journals, including Science, and is a respected member of multiple professional organizations, including the Digital Pathology Association.

Reveal Biosciences is a computational pathology company focused on tissue-based research.

When I was a child, I went to a local science day and watched a scientist pour liquid nitrogen onto the floor. The liquid nitrogen changed from liquid to gas, something I’d never seen before, and I thought it was amazing! It really sparked my interest in science. I love biotechnology because it sits at the interface of science and technology and solves real-world problems.

Several years ago, I was working at a different company developing a biomarker-based test for breast cancer. As part of that test, we sent a set of 150 patient slides to three different pathologists to review and diagnose. We then compared their results to our quantitative biomarker test. What really struck me at the time was the variation in the results we got back from the pathologists. These were all highly qualified, experienced pathologists, yet they didn't agree on the results for every patient. This matters because the way patients are treated often depends on how the pathologist reads the slide. It became clear that a quantitative, computational approach could provide more accurate and reproducible data to benefit patients. This became one of the driving missions of our company.

We provide data from microscope slides or pathology samples that can benefit research, clinical trials, and patients. For example:

- We generate quantitative pathology data to help pharmaceutical companies develop therapeutic drugs.
- We use that data in clinical trials to increase precision and stratify patient groups.
- We're building pathology data applications to help pathologists diagnose disease in ways that will ultimately benefit patients.

We are fairly unusual in that we have two teams: a scientific team in the lab doing pathology, and a computational team of data scientists and software engineers developing our AI-based platform. Our ImageDx platform includes models that generate highly quantitative data and diagnostic outputs that can be applied to many different diseases. The products we are working on are unique and differentiate us, but the main driver is the quantitative pathology data that we generate.

We've used traditional machine learning to identify and quantify cells in images for a while, but in the last few years AI has advanced significantly. It's impressive to see how well it works on pathology images. We've made the natural evolution from traditional machine learning to AI. Compute power is now more readily available, which means we can generate data from one patient slide in minutes rather than the days or weeks it used to take. This sea change in computational speed makes the data we generate more meaningful and relevant to routine pathology workflows.

There's a huge shortage of pathologists worldwide. Even in the US, where we have very highly qualified pathologists, we’re heading for a retirement cliff. Fewer pathologists are coming through residency to maintain their numbers. This is particularly evident in rural areas, where there's a real shortage of expertise. A cloud-based approach will help address some of those problems.

I'm excited by the potential for AI in a cloud-based platform to bring advanced pathology expertise anywhere with internet access. Hospitals or pathology labs throughout the world could upload an image of a microscope slide to the cloud, where it can be analyzed to generate advanced diagnostics. Countries with limited resources can often produce the most basic kind of microscope slide, but they sometimes lack the ability to run more advanced diagnostics. Making that possible is going to revolutionize pathology and have a global impact on healthcare. It should also benefit patients in the US by helping to lower the cost of healthcare.

The application of AI in pathology is very new. We've been developing this for a while, and we're launching our first products in the clinic for patients in 2019. We are also building more advanced pathology models by integrating other data sources. We’re finding that we can use AI to detect aspects of cancer that are not obvious just by looking down a microscope. For example, we're detecting small changes in the texture of cell nuclei and other small cellular changes. You wouldn't necessarily notice these by eye, but they can be predictive or prognostic of disease. I think this is going to be really impactful for personalized medicine.

Tech Spotlight Interviews

IT is a journey, not a destination. We want to hear about YOUR journey!

Are you a technology innovator or enthusiast?

We would love to highlight you in the next edition of our Tech Spotlight.
