Copilot vs ChatGPT has quickly become one of the most talked-about comparisons as AI has grown from a niche tool into a central part of how teams operate. From marketing and legal to finance and HR, AI is now embedded in workflows across nearly every department. Tools like ChatGPT and Microsoft Copilot are leading the charge, helping teams automate tasks, generate content, and analyze data faster than ever.

But with rapid adoption comes real concern, especially around security. If you’re exploring Copilot for business or considering ChatGPT for work, it’s important to understand how each tool handles data, privacy, and compliance. This post breaks down the key differences between ChatGPT and Copilot and how to use AI safely at work, with a focus on enterprise security, real-world use cases, and what teams need to know before choosing a platform.

Is ChatGPT Safe to Use for Work?

The short answer is: it depends on how you’re using it.

ChatGPT Enterprise offers SOC 2 compliance, encryption of data at rest and in transit, and admin tools like SSO and domain verification. By default, OpenAI does not use ChatGPT Enterprise business data to train its models.

But the free version of ChatGPT doesn’t offer the same protection, and that’s where most of the risk lies. Conversations in consumer versions of ChatGPT may be used to improve OpenAI’s models unless the user opts out, and employees often paste sensitive data into it without realizing it could be retained or used for training. Examples include customer emails, product roadmaps, and even contract language.

A Look at ChatGPT Security Breaches

ChatGPT has faced several notable security incidents:

- In March 2023, a bug exposed the titles of some users’ chat histories to other users, along with partial payment details for a small share of ChatGPT Plus subscribers.

- In June 2023, over 101,000 ChatGPT credentials were found for sale on the dark web, compromised by malware on users’ devices.

- In December 2023, researchers extracted over 10,000 memorized training examples, including personal data, by prompting the model to repeat a single word indefinitely.

- Samsung banned ChatGPT internally after employees pasted sensitive source code into it, and Apple restricted employee use of ChatGPT over similar concerns about leaking confidential data.

These incidents highlight why ChatGPT business security needs to be taken seriously. Even if the platform itself isn’t breached, user behavior can create vulnerabilities.

Microsoft Copilot: Built for Enterprise Security

Microsoft Copilot is embedded directly into Microsoft 365 apps like Word, Excel, Outlook, and Teams. It doesn’t use organizational data to train its models and inherits the same compliance and security controls already in place across Microsoft 365.

Copilot respects existing permissions managed through Microsoft Entra ID (formerly Azure Active Directory), so it only surfaces documents a user is already authorized to access. It is also aligned with GDPR, HIPAA, and ISO/IEC 27001 requirements, making it a safer choice for regulated industries.

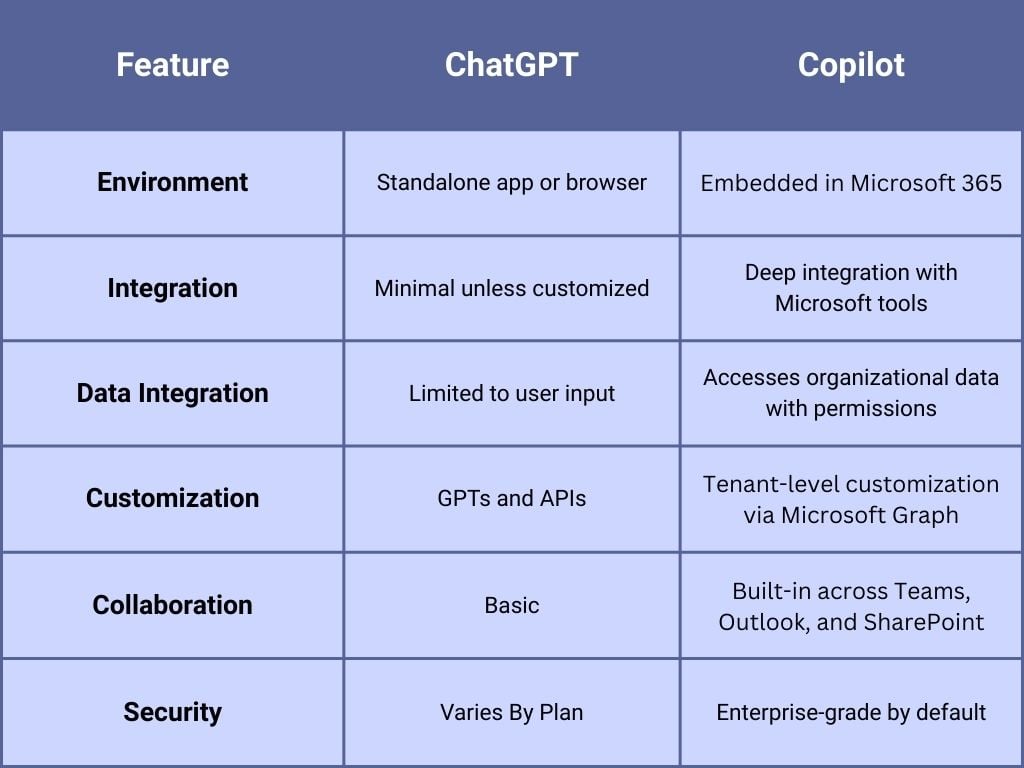

General Usage Differences: ChatGPT vs Copilot

ChatGPT is great for creative tasks, coding support, and general Q&A. Copilot excels in structured environments where productivity and compliance are key.

Which Tool Works Best for Which Roles?

ChatGPT is ideal for:

- Marketing teams: Brainstorming, drafting content, generating ideas

- Developers: Code generation, debugging, technical Q&A

- Students and freelancers: Research, writing, learning support

Microsoft Copilot is best for:

- Legal teams: Reviewing contracts with access-controlled documents

- Finance teams: Analyzing spreadsheets, forecasting, summarizing reports

- Operations and HR: Automating routine tasks, summarizing meetings, managing workflows

Dive into more use cases for Copilot in business here. It’s important to note that the rapid advancement of Copilot’s creation tools and its Researcher and Analyst agents has quickly made Microsoft Copilot a strong contender for marketers, developers, freelancers, and students as well. Watch our latest webinar recap on Copilot Create and register for our upcoming session covering Copilot Analyzer to explore these features with expert guidance.

If your team is already using Microsoft 365, Copilot is the natural fit. If you need flexibility and creative support, ChatGPT can be a powerful tool, especially with the enterprise version.

What Not to Enter into ChatGPT

Even with enterprise controls, there are things you should never share with ChatGPT:

- Sensitive company data: Internal documents, source code, client information

- Creative works and IP: Proprietary content, unpublished writing, designs

- Financial information: Bank details, tax IDs, payment credentials

- Personal data: Names, addresses, phone numbers, government IDs

- Usernames and passwords: Never store or test credentials in ChatGPT

- Confidential conversations: As the March 2023 bug showed, details of your chat history can be exposed to other users

In general, the best guidance for using ChatGPT at work is to treat it as if it were a public forum.

Final Thoughts

Though both tools are built on OpenAI’s GPT models, they serve very different purposes. ChatGPT is versatile and accessible, great for creative exploration and flexible support. Microsoft Copilot, on the other hand, is context-aware, secure, and deeply integrated into enterprise workflows.

If you’re part of an organization using Microsoft 365, Copilot for work is likely the safer and more seamless choice. If you’re a freelancer, marketer, or developer, ChatGPT for business can be a powerful ally so long as you remain mindful of what you share.

Security isn’t just a feature. It’s a foundation. As AI in the workplace becomes more common, choosing the right tool means balancing innovation with protection. If you want to learn more about Copilot, check out our events page for our latest webinar series to get on-demand insights and register for upcoming sessions!

If you’re interested in learning how Copilot can benefit your business, click here to chat with an expert and we’ll be happy to walk you through everything you need to know.